Artificial Intelligence has become embedded in more and more aspects of our lives, and sports are no exception. For this reason, it is crucial for managers to be aware of its applications, its implications for the industry, and the opportunities and challenges that lie ahead.

This article is a concise adaptation of my Master's Thesis, titled "Game-Changer: How Machine Learning Techniques are Taking Sports Performance to New Heights, and How Managers Can Leverage Them." It aims to provide a high-level qualitative overview of the evolution trajectory of Machine Learning (ML) techniques in the sports domain.

The goal of this study is to provide answers to three main research questions:

1. How are ML solutions pervading and transforming the sports industry?

2. What developments can we expect to see in the near future?

3. What challenges will managers need to face?

To do so, the methodology of the work involves:

1. A technical analysis of AI applications in sports

2. A case study on the evolution of chess algorithms

3. An overview of the current industry trends

Before performing the analysis, a literature review was carried out to identify the state of the art in this field. This process highlighted a lack of meta-analyses and systematic reviews, the main one being five years old, and a scarcity of narrative reviews.

Concerning the case study, the four breakthrough papers that were used are:

1. Shannon (1950), which introduced the first heuristic approach to chess algorithms, using an N-step lookahead strategy to maximize an evaluation function through the minimax algorithm.

2. Campbell et al. (2002), which presented Deep Blue, the computer chess system that was able to beat world champion Garry Kasparov thanks to efficient parallel search and cutting-edge hardware.

3. Silver et al. (2017), which introduced AlphaZero, the first chess-playing algorithm trained purely by Reinforcement Learning through self-play, which outperformed all existing algorithms.

4. McGrath et al. (2022), where the learning pattern of AlphaZero is compared to that of humans, in a first attempt to improve the explainability of the model.

Before diving into the case study, it is key to understand how different types of ML algorithms are currently used in the sports domain. First, Supervised Learning is the most widely used category and is already employed, for example, to offer tailored training plans to athletes with the help of a labelled historical dataset. Unsupervised Learning is used in object detection systems like Hawk-Eye: these algorithms can identify and track moving objects without the need for a labelled training dataset. Interest in Semi-Supervised Learning is on the rise, thanks to the opportunities arising from Transfer Learning to achieve strong performance with a smaller amount of data. Think of AI assistants that are pre-trained on natural language and then fine-tuned for a specific task. Lastly, Reinforcement Learning has been getting more attention in recent years, thanks to its successful applications to sports strategy, from motorsport to football.

The choice of chess as a case study comes from its potential to predict what will happen in other sports: because it is easier to model, it has always been a testing ground for AI.

Let’s start the case study by understanding how chess algorithms have developed over six decades. The heuristic approach involves defining an evaluation function that computes a player’s advantage or disadvantage in a position. This function takes into account material (the balance of pieces left on the board after each side’s captures) and position (the impact of available moves, square control, castling possibility, and such). This function is then used to evaluate all the positions available to the player, then all the replies the opponent has to each of them, and so on. The exponential growth of computational costs in this n-step lookahead technique represents an important limitation, considering that the number of possible games (or paths in the tree) has been estimated to be above 10^120. Despite this, IBM’s Deep Blue was able to defeat Kasparov with a Minimax-based search, thanks to outstanding hardware improvements, a more sophisticated evaluation function, and a more efficient tree search.

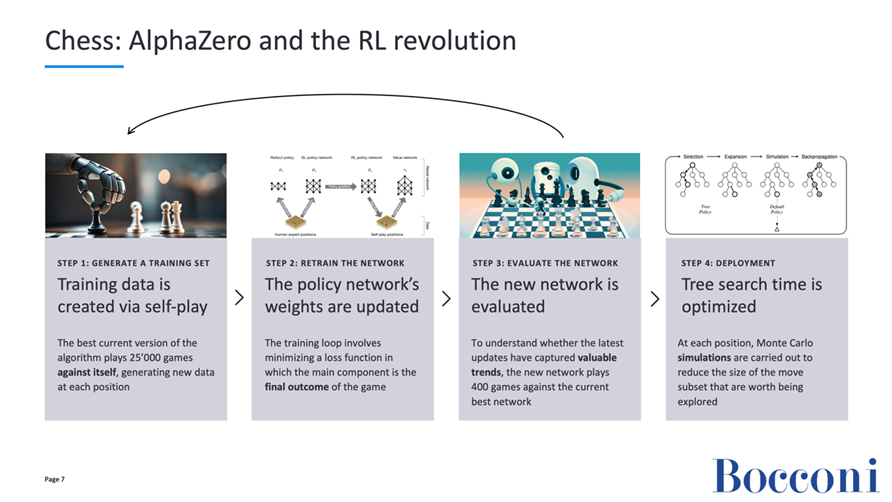
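To make the lookahead idea concrete, here is a minimal Python sketch of depth-limited minimax. It runs on a toy stone-taking game rather than chess, and every name in it (`nim_moves`, `nim_evaluate`, `minimax`, `best_move`) is an illustrative assumption, not code from Shannon's paper or from Deep Blue.

```python
from typing import Callable, Iterable, List, Tuple

# (stones left, player to move: +1 = maximizer, -1 = minimizer)
State = Tuple[int, int]


def nim_moves(state: State) -> List[State]:
    """Toy rules (assumption): a player removes 1 to 3 stones per turn."""
    stones, player = state
    return [(stones - k, -player) for k in (1, 2, 3) if k <= stones]


def nim_evaluate(state: State) -> float:
    """Evaluation function, always from the maximizer's point of view."""
    stones, player = state
    if stones == 0:
        # The player who just moved took the last stone and wins.
        return float(-player)
    return 0.0  # neutral heuristic for unfinished positions


def minimax(
    state: State,
    depth: int,
    maximizing: bool,
    moves: Callable[[State], Iterable[State]],
    evaluate: Callable[[State], float],
) -> float:
    """Look `depth` plies ahead, then fall back on the evaluation function."""
    children = list(moves(state))
    if depth == 0 or not children:
        return evaluate(state)
    values = [
        minimax(child, depth - 1, not maximizing, moves, evaluate)
        for child in children
    ]
    return max(values) if maximizing else min(values)


def best_move(
    state: State,
    depth: int,
    moves: Callable[[State], Iterable[State]],
    evaluate: Callable[[State], float],
) -> State:
    """Pick the maximizer's move leading to the highest minimax value."""
    return max(
        moves(state),
        key=lambda child: minimax(child, depth - 1, False, moves, evaluate),
    )


if __name__ == "__main__":
    # With 5 stones, the maximizer should leave 4 for the opponent.
    print(best_move((5, 1), depth=5, moves=nim_moves, evaluate=nim_evaluate))
```

With roughly b legal moves per position, a d-ply lookahead visits on the order of b^d positions, which is why a full search is out of reach in chess and why the quality of the evaluation function matters so much.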

The real revolution took place in 2017 with Google DeepMind’s release of AlphaZero, a chess algorithm that learned to play with no prior knowledge of chess beyond its rules and without any human-played games in its training dataset. At its release, AlphaZero was far stronger and faster than any other algorithm, sparking a wave of innovation in chess systems and pro-level play. At the core of this technology is a deep neural network with two outputs: a value output that evaluates the position and a policy output that identifies the most promising moves. The system generates new data via self-play, then updates the network weights by minimizing a loss function that depends on the outcome of the game. Unlike its predecessor AlphaGo Zero, which tested each new version against the previous best one, AlphaZero maintains a single network that is updated continually. This process is iterative, and AlphaZero played 44 million games against itself in nine hours of training. Moves are selected, both during self-play and during deployment, through Monte Carlo Tree Search (MCTS), whose simulations balance exploration and exploitation in the search for optimal moves.
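The two ingredients just described, the training loss and the exploration/exploitation balance in the tree search, can be sketched in a few lines of Python. This is a simplified illustration in the spirit of the published AlphaZero formulas, not DeepMind's implementation: the function names and the constants `c` and `c_puct` are assumptions chosen for the example.

```python
import math
from typing import Dict, Sequence, Tuple


def alphazero_style_loss(
    z: float,                 # game outcome from the player's view: -1, 0 or +1
    v: float,                 # value predicted by the network
    pi: Sequence[float],      # move probabilities produced by the search
    p: Sequence[float],       # move probabilities predicted by the network
    weights: Sequence[float] = (),
    c: float = 1e-4,          # L2 regularization strength (assumed value)
) -> float:
    """Squared value error + policy cross-entropy + L2 penalty."""
    value_loss = (z - v) ** 2
    policy_loss = -sum(t * math.log(q) for t, q in zip(pi, p) if t > 0)
    l2_penalty = c * sum(w * w for w in weights)
    return value_loss + policy_loss + l2_penalty


# Per-move search statistics: (visit count N, mean value Q, prior P)
MoveStats = Dict[str, Tuple[int, float, float]]


def puct_select(stats: MoveStats, c_puct: float = 1.5) -> str:
    """Pick the move maximizing Q + U, where U rewards high-prior,
    rarely visited moves (exploration) and Q rewards good results so far
    (exploitation)."""
    total_visits = sum(n for n, _, _ in stats.values())

    def score(move: str) -> float:
        n, q, prior = stats[move]
        exploration = c_puct * prior * math.sqrt(total_visits) / (1 + n)
        return q + exploration

    return max(stats, key=score)


if __name__ == "__main__":
    stats = {
        "e4": (10, 0.2, 0.5),   # well explored, decent results
        "d4": (0, 0.0, 0.4),    # never visited, high prior
        "a3": (1, -0.3, 0.1),   # poor result, low prior
    }
    print(puct_select(stats))  # the unvisited high-prior move gets explored
    print(alphazero_style_loss(z=1.0, v=-1.0, pi=[1.0, 0.0], p=[0.5, 0.5]))
```

In the example, the move that has never been visited but has a high prior is selected, even though another move currently has a better average result. As visit counts grow, the exploration term shrinks and the search concentrates on the moves with the best values.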

The impressive results of this technology opened up a range of new possibilities and questions, such as:

· Can we derive novel insights for pro-level play by breaking down the network’s predictions into human-understandable concepts?

· Would we get even better performance by merging human and machine knowledge?

· Will we be able to fine-tune models to emulate the style of a player, turning them into incredibly powerful training support tools?

· Is it possible to embed human knowledge in a way that allows for the creation of AI-powered coaches that recognize human ideas, spot mistakes, and explain them in a human-understandable way?

Following its outstanding results in chess algorithms, DeepMind widened its scope to other sports. For example, we can look at TacticAI, a football tactics assistant released in March 2024 and currently specialized in set-piece strategy. It has been validated in collaboration with five experts from Liverpool FC (three data scientists, one video analyst, and one coaching assistant), and its purpose is to evaluate a team’s current tactics and refine them using Graph Neural Networks (GNNs). Furthermore, TacticAI predicts which player will receive the ball and whether they are going to take a shot, enhancing the model’s explainability. Moreover, the model’s ability to encode data opens the door to powerful retrieval applications. Below are a few examples of the model’s output, with the constructive adjustments it suggests in yellow.

This extraordinary technological development poses novel challenges for managers who will deal with AI projects in the sports industry. This work highlights six issues and presents a solution to each one. First, data privacy concerns and the public’s growing distrust of AI require managers to ensure these projects align with the company’s strategy and are communicated transparently, keeping in mind the impact they will have on the workforce. Second, data quality is still an issue in sport biometrics, and the scarce availability of high-quality data can widen the gap between teams. Working with federations will be key to ensuring fair rules that favour competition; successful examples can be found in MLB and MotoGP. Third, the slowdown of the open-source wave is putting companies in an uncertain situation, since the tools they rely on might become closed-source in the future. Make-or-buy (or partner) decisions are critical, and the cost volatility of software needs careful consideration.

Next, the biggest blocker of AI adoption by practitioners is the loss of control they perceive. To mitigate this, managers should always start with the end user in mind and work backwards to give them maximum value, instead of pursuing “innovation for the sake of innovation.” AI explainability can help increase trust in these tools. Then, there are the budget issues that afflict smaller sports, where limited funds often constrain innovation. Exploring transfer learning and data-sharing opportunities between sports that share similarities (for instance, two racket sports) may allow technological results to generalize while keeping costs affordable. Lastly, the evolving nature of sports means that ML models are at constant risk of concept drift: if a structural component of the sport changes, say the regulations or the specs of the equipment used, the underlying data distribution will change as well, possibly causing a steep decrease in performance. Keeping a tight feedback loop on models in production and performing periodic tests against other top alternatives can mitigate this constant risk.
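As an illustration of what a tight feedback loop could look like in practice, the sketch below flags possible concept drift when a model's recent prediction error rises well above the level measured at validation time. It is a deliberately simple heuristic under stated assumptions: the class name `DriftMonitor`, the window size, and the tolerance factor are all hypothetical choices, and a production system would likely rely on more robust statistical tests.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Flags drift when the rolling mean error exceeds a multiple of the
    baseline error observed when the model was validated."""

    def __init__(self, baseline_error: float, window: int = 50,
                 tolerance: float = 1.5) -> None:
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        # Only the most recent `window` errors are kept.
        self.recent = deque(maxlen=window)

    def update(self, error: float) -> bool:
        """Record one new prediction error; return True if drift is suspected."""
        self.recent.append(error)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough recent data to judge yet
        return mean(self.recent) > self.tolerance * self.baseline_error


if __name__ == "__main__":
    # Hypothetical error stream: stable at first, then a rule change hits.
    monitor = DriftMonitor(baseline_error=1.0, window=3, tolerance=1.5)
    for err in [1.0, 1.0, 1.0, 1.2, 3.0, 3.0]:
        print(err, monitor.update(err))
```

When the monitor fires, the usual next step would be to retrain or recalibrate the model on data collected after the change, and to benchmark it again against the available alternatives.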

In conclusion, here are the limitations of the work and some possibilities for future research. The limitations include the qualitative nature of the research, a lack of comparative studies, and high uncertainty in the legal and competitive landscape around AI. The research opportunities relate to topics we touched upon: AI governance and how it can be measured, sentiment analysis on sports applications of AI, and exploration of transfer learning opportunities.
